Football Analytics - Overall Model Performance Comparison

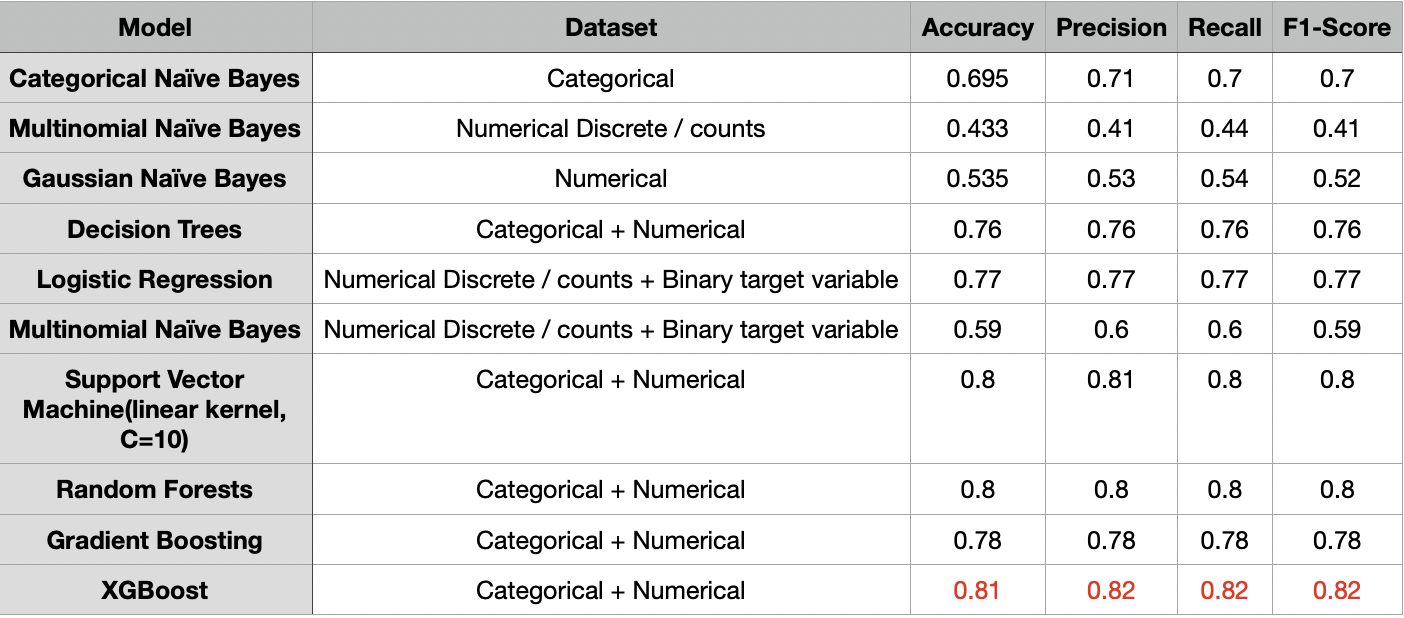

This section provides a comparative analysis of all the classification models implemented to predict football player market value categories. The performance is evaluated based on Accuracy, Precision, Recall, and F1-Score, considering the type of dataset used for each model.
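As a reference for how the four metrics relate, here is a minimal pure-Python sketch. The source does not state which averaging scheme was used for the multi-class scores, so macro averaging is an assumption here, and the `low`/`mid`/`high` labels and sample predictions are purely illustrative.

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Return accuracy plus macro-averaged precision, recall, and F1.

    Macro averaging is an assumption; the report does not say which
    averaging scheme its scores use.
    """
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    precisions, recalls, f1s = [], [], []
    for label in labels:
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical market-value categories, for illustration only.
y_true = ["low", "low", "mid", "mid", "high", "high"]
y_pred = ["low", "mid", "mid", "mid", "high", "low"]
metrics = macro_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Accuracy and F1 can differ, as they do for XGBoost and the Naive Bayes models below, because F1 is computed per class from precision and recall before averaging.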

Summary Table

The table below summarizes the performance metrics for each model:

Comparison of all models

Performance Analysis

Top Performers:

- XGBoost emerged as the top-performing classifier with an accuracy of 0.81 and an F1-score of 0.82, leveraging its gradient boosting framework and regularization capabilities to handle the mixed categorical and numerical features in the 3-class classification task.

- Support Vector Machine (with a linear kernel and C=10) and Random Forests achieved identical performance metrics (0.80 for both accuracy and F1-score). The SVM result shows that a properly tuned linear model can compete without being an ensemble method, while Random Forests delivered reliable results through a bagging ensemble approach that helps prevent overfitting.

Overall, the small performance differences suggest that all three models offer viable solutions for this classification problem, with XGBoost providing a slight edge in predictive power.
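The SVM and Random Forest comparison above could be set up along the following lines with scikit-learn. This is a sketch under stated assumptions: the data is synthetic (`make_classification`) rather than the player dataset, the feature scaling step and the Random Forest settings are illustrative choices, and weighted F1 averaging is assumed. Only the linear kernel and `C=10` come from the text. XGBoost is left out because it lives in a separate package.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic 3-class data standing in for the player dataset (assumption).
X, y = make_classification(
    n_samples=600,
    n_features=12,
    n_informative=6,
    n_classes=3,
    random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

models = {
    # Linear kernel and C=10 follow the text; scaling is an added assumption.
    "SVM (linear, C=10)": make_pipeline(
        StandardScaler(), SVC(kernel="linear", C=10)
    ),
    # n_estimators is an illustrative choice, not taken from the report.
    "Random Forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    scores[name] = (
        accuracy_score(y_test, pred),
        f1_score(y_test, pred, average="weighted"),
    )
    print(f"{name}: accuracy={scores[name][0]:.2f}, F1={scores[name][1]:.2f}")
```

Scores on synthetic data will not match the figures reported here; the point is only the evaluation pattern of fitting each model on the same split and scoring it with the same metrics.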

Moderate Performers:

- Gradient Boosting achieved moderate performance with an accuracy and F1-score of 0.78, trailing the top performers by two to four points.

- Logistic Regression demonstrated reliable performance on a binary classification variant of the task, scoring 0.77 on both metrics and outperforming baseline models, which underscores its effectiveness when dealing with linear relationships in well-processed data. Because it was evaluated on the binary variant, its score is not directly comparable with the 3-class results.

- Decision Trees delivered respectable results with an accuracy and F1-score of 0.76 despite being a single model rather than an ensemble method, suggesting they captured meaningful patterns in the data while offering the added benefit of interpretability.

Lower Performers:

- The Naive Bayes models showed varied effectiveness, with Categorical NB performing best (accuracy: 0.695, F1-score: 0.70) by leveraging categorical variables like player position and nationality.

- Gaussian NB delivered mediocre results (accuracy: 0.535, F1-score: 0.52), most likely because the features violate its assumption that each one is normally distributed within a class.

- Multinomial NB struggled significantly on the 3-class task (accuracy: 0.433, F1-score: 0.41) and showed only modest improvement on binary classification (accuracy and F1-score: 0.59).
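To view the scores quoted in this section side by side, the following sketch collects them and ranks them by F1-score. The numbers are copied from the text above. The `task` field is filled in only where the text states it and is otherwise marked `unstated`. Precision and recall are omitted because the prose does not report them.

```python
from typing import NamedTuple


class Result(NamedTuple):
    model: str
    task: str  # "3-class", "binary", or "unstated" when the text is silent
    accuracy: float
    f1: float


# Scores as quoted in the analysis above.
results = [
    Result("XGBoost", "3-class", 0.81, 0.82),
    Result("SVM (linear, C=10)", "unstated", 0.80, 0.80),
    Result("Random Forest", "unstated", 0.80, 0.80),
    Result("Gradient Boosting", "unstated", 0.78, 0.78),
    Result("Logistic Regression", "binary", 0.77, 0.77),
    Result("Decision Tree", "unstated", 0.76, 0.76),
    Result("Categorical NB", "unstated", 0.695, 0.70),
    Result("Gaussian NB", "unstated", 0.535, 0.52),
    Result("Multinomial NB", "3-class", 0.433, 0.41),
    Result("Multinomial NB", "binary", 0.59, 0.59),
]

# Sort by F1, highest first; ties keep their original order.
ranked = sorted(results, key=lambda r: r.f1, reverse=True)

print(f"{'Model':<22}{'Task':<10}{'Accuracy':>9}{'F1':>7}")
for r in ranked:
    print(f"{r.model:<22}{r.task:<10}{r.accuracy:>9.3f}{r.f1:>7.2f}")
```

Binary and 3-class rows appear in the same ranking only for convenience; scores obtained on different task variants are not directly comparable.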